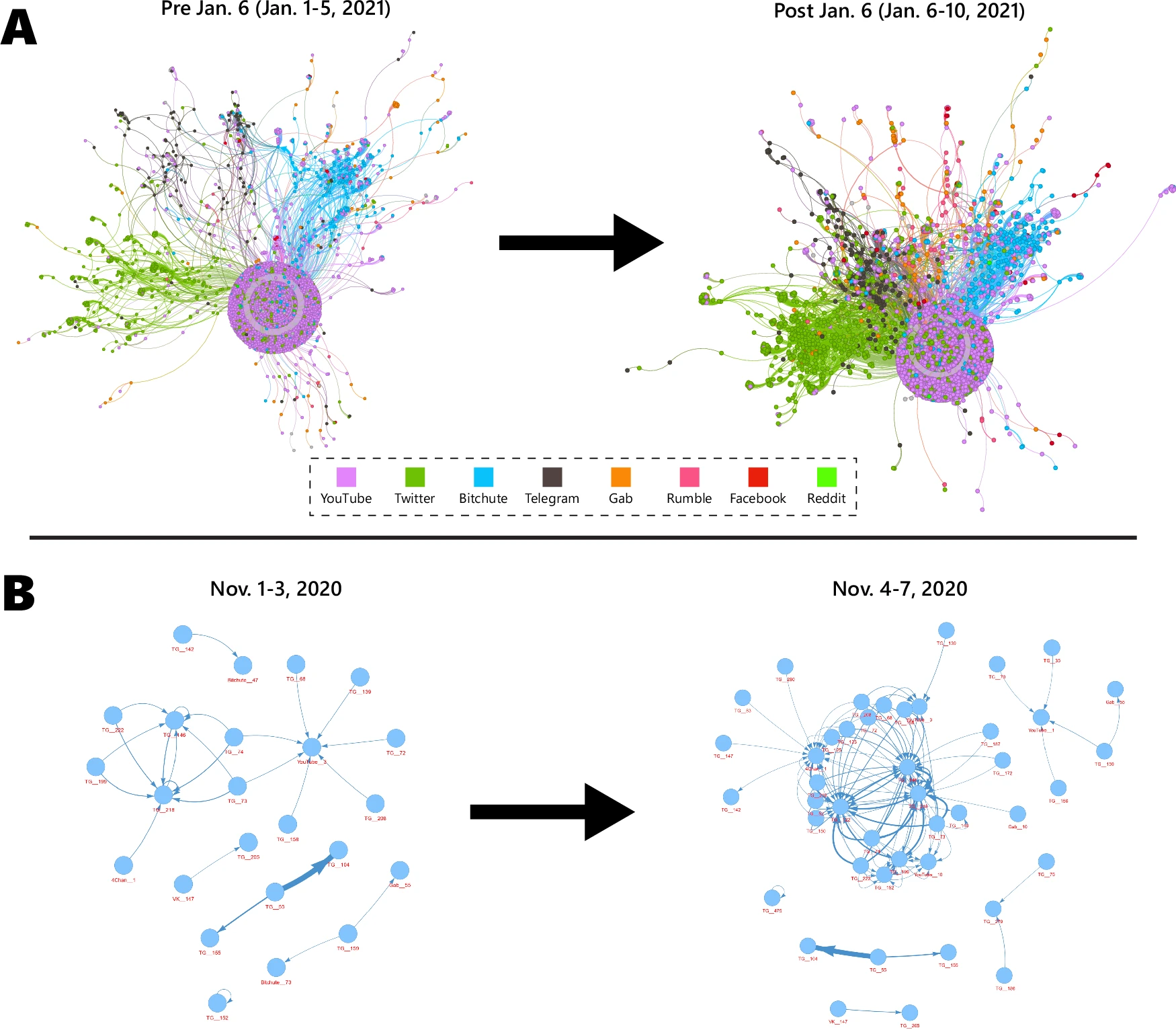

A new study published today details how the 2020 U.S. election not only incited new hate content in online communities but also brought those communities closer together around online hate speech. The research has wider implications for understanding how the online hate universe multiplies and hardens around local and national events such as elections. It also shows how smaller, less regulated platforms like Telegram play a key role in that universe by helping to create and sustain hate content.

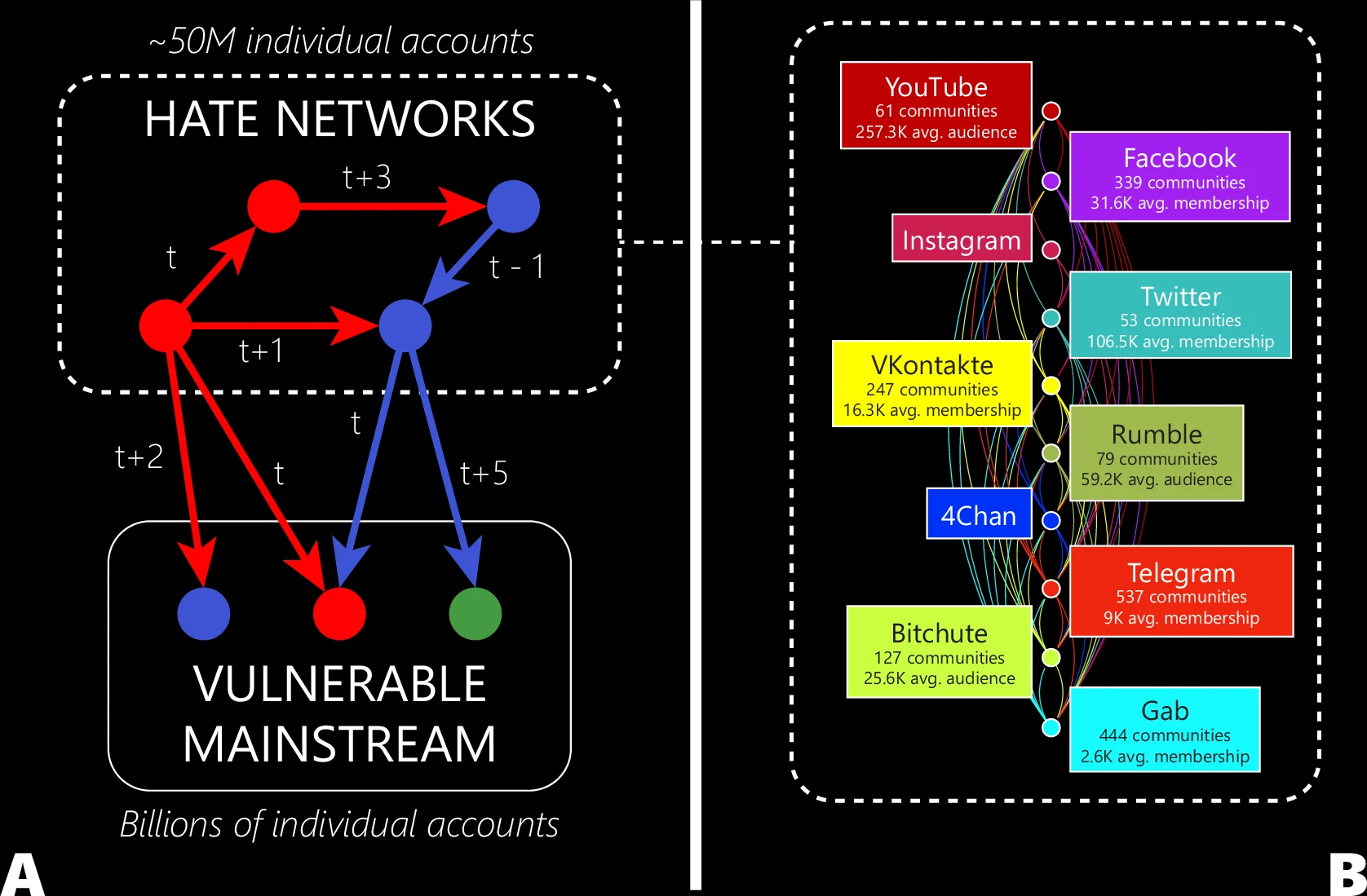

The study, published in the journal npj Complexity (part of the Nature portfolio of journals), found that the 2020 U.S. election drew approximately 50 million accounts in online hate communities closer together and closer to the broader mainstream, which includes billions of people.

The research also found that the election incited new hate content around specific issues, such as immigration, ethnicity, and antisemitism, which often align with far-right conspiracy theories. The team identified a significant uptick in hate speech targeting these three issues around November 7, 2020, when Joe Biden was declared the winner of the U.S. presidential race. It also identified a similar surge in anti-immigration content on and after January 6, 2021.

The research team developed a new tool to take a closer look at the online world and the hate content spreading there. Led by George Washington University (GW) physics professor Neil Johnson, GW researchers Rick Sear and Akshay Verma built an ‘online telescope’ that maps the online hate universe at unprecedented scale and resolution.

“Politics can be a catalyst for potentially dangerous hate speech. Combine that with the internet, where hate speech thrives, and that’s an alarming scenario. This is why it’s critical to understand exactly how hate at the individual level multiplies to a collective global scale,” says Johnson. “This research fills in that gap in understanding of how hate evolves globally around local or national events like elections.”

Johnson and the research team found that the social media platform Telegram acts as a central hub for communication and coordination between hate communities, yet Telegram is often overlooked by U.S. and E.U. regulators, Johnson says.

Moving forward, the researchers suggest that current policies focused only on popular platforms, such as Facebook, Twitter, or TikTok, will not be effective in curbing hate and other online harms, since different platforms can play different roles in the online hate community. They also recommend that anti-hate messaging deployed to combat online hate speech not be tied specifically to the event itself, since hate speech around real-world events may also incorporate adjacent themes. Messaging that targets only a U.S. election, for example, may fail to reach audiences who are spreading hate speech about immigration, ethnicity, or antisemitism.

The paper, “How U.S. Presidential elections strengthen global hate networks,” was published October 29, 2024. The U.S. Air Force Office of Scientific Research and the John Templeton Foundation funded the research.
