When it comes to making moral decisions, we often think of the golden rule: do unto others as you would have them do unto you. Yet, why we make such decisions has been widely debated. Are we motivated by feelings of guilt, where we don’t want to feel bad for letting the other person down? Or by fairness, where we want to avoid unequal outcomes? Some people may rely on principles of both guilt and fairness and may switch their moral rule depending on the circumstances, according to a Radboud University – Dartmouth College study on moral decision-making and cooperation. The findings challenge prior research in economics, psychology and neuroscience, which is often based on the premise that people are motivated by one moral principle, which remains constant over time. The study was published recently in Nature Communications.

“Our study demonstrates that with moral behavior, people may not in fact always stick to the golden rule. While most people tend to exhibit some concern for others, others may demonstrate what we have called ‘moral opportunism,’ where they still want to look moral but want to maximize their own benefit,” said lead author Jeroen van Baar, a postdoctoral research associate in the department of cognitive, linguistic and psychological sciences at Brown University, who started this research when he was a scholar at Dartmouth visiting from the Donders Institute for Brain, Cognition and Behavior at Radboud University.

“In everyday life, we may not notice that our morals are context-dependent since our contexts tend to stay the same daily. However, under new circumstances, we may find that the moral rules we thought we’d always follow are actually quite malleable,” explained co-author Luke J. Chang, an assistant professor of psychological and brain sciences and director of the Computational Social Affective Neuroscience Laboratory (Cosan Lab) at Dartmouth. “This has tremendous ramifications if one considers how our moral behavior could change under new contexts, such as during war,” he added.

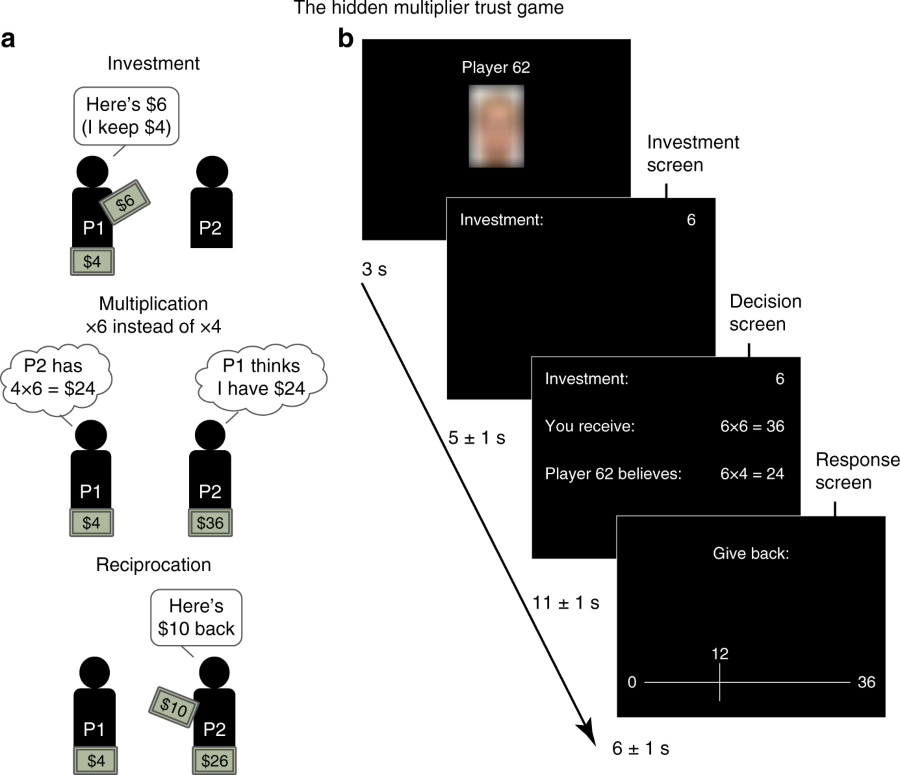

To examine moral decision-making within the context of reciprocity, the researchers designed a modified trust game called the Hidden Multiplier Trust Game, which allowed them to classify decisions in reciprocating trust as a function of an individual’s moral strategy. With this method, the team could determine which type of moral strategy a study participant was using: inequity aversion (where people reciprocate because they want to seek fairness in outcomes), guilt aversion (where people reciprocate because they want to avoid feeling guilty), greed, or moral opportunism (a new strategy that the team identified, where people switch between inequity aversion and guilt aversion depending on what will serve their interests best). The researchers also developed a computational moral-strategy model to explain how people behave in the game, and they examined the brain activity patterns associated with each moral strategy.

The findings reveal for the first time that unique patterns of brain activity underlie the inequity aversion and guilt aversion strategies, even when the strategies yield the same behavior. For the participants who were morally opportunistic, the researchers observed that their brain patterns switched between the two moral strategies across different contexts. “Our results demonstrate that people may use different moral principles to make their decisions, and that some people are much more flexible and will apply different principles depending on the situation,” explained Chang. “This may explain why people that we like and respect occasionally do things that we find morally objectionable.”
